The Role of NVMe Network Storage for the Future

The COVID-19 pandemic has led to rapid adoption of work-from-home models and, in turn, posed a challenge for the storage industry: finding the right balance between speed, performance, and cost in remote environments.

Performance and efficiency have always come at a high cost, however. Many players in the storage industry are moving to more efficient but more expensive storage hardware such as SSDs, while enterprises still widely use HDDs because of their lower cost.

Silvano Gai, author of Building a Future-Proof Cloud Infrastructure from Pearson, discussed the shift in an interview with TechTarget. Organizations are rapidly adopting NVMe, especially NVMe-oF, according to research by the Enterprise Strategy Group.

“NVMe was created as a reaction to SCSI, as an alternative to SCSI. It had nothing to do technically; it was more [of] a business and industry decision,” Gai said. “The advantage of NVMe over SCSI is that in NVMe there is huge support for parallel operations and pending queue. And that, of course, dramatically increases throughput as you head to SSDs.”
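A back-of-the-envelope sketch can show why support for many pending operations matters. It applies Little's law (throughput equals requests in flight divided by the time each request takes) and assumes latency stays constant as more requests are queued, which real devices only approximate. The function name, the 100-microsecond latency, and the queue depths are illustrative assumptions, not figures from Gai or from any specification.

```python
def max_iops(outstanding_requests: int, latency_s: float) -> float:
    """Upper bound on I/O operations per second from Little's law.

    Assumes per-request latency does not grow as more requests are in flight.
    """
    if outstanding_requests < 1 or latency_s <= 0:
        raise ValueError("need at least one request and a positive latency")
    return outstanding_requests / latency_s


if __name__ == "__main__":
    assumed_latency_s = 100e-6  # assumed 100 microseconds per request
    for depth in (1, 32, 1024):  # illustrative numbers of pending requests
        bound = max_iops(depth, assumed_latency_s)
        print(f"{depth:>5} requests in flight: up to {bound:,.0f} IOPS")
```

Under these assumptions the bound grows linearly with the number of requests the interface can keep pending, which is the effect Gai attributes to NVMe's queueing model.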

Cloud and remote storage capabilities are also contributing to the rise of NVMe network storage. Large organizations like Facebook and Microsoft have started adopting NVMe cloud storage due to its higher IOPS and lower latency. The protocol will likely expand to the entire industry in the coming years.

Storage is “probably the most natural candidate for disaggregation,” Gai writes in the book, as organizations seek to reduce latencies and distribute storage resources more efficiently. Separating compute from storage through disaggregation allows organizations to scale applications without losing performance.

Additionally, as data continues to grow in volume, many organizations have turned to the various NVMe protocol specifications, such as NVMe-oF, as they strive to eliminate inefficiencies and meet their ever-expanding compute and storage needs without sacrificing performance.

Below is an excerpt from Chapter 6, “Distributed Storage and RDMA Services,” of Building a Future-Proof Cloud Infrastructure. In this chapter, Gai discusses remote storage, distributed storage, and the rise of SSDs and NVMe network storage.

6.2.4 Remote storage meets virtualization

Economies of scale have prompted large compute cluster operators to consider server disaggregation. Among server components, storage is probably the most natural candidate for disaggregation, primarily because the average latency of accessing the storage medium can tolerate network traversal. Even though the recent widespread adoption of SSDs has greatly reduced these latencies, network performance has improved along with them. As a result, the trend toward storage disaggregation that began with the creation of Fibre Channel has been greatly accelerated by the growing popularity of high-volume clouds, which have created an ideal opportunity for consolidation and cost reduction.
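The latency argument above can be made concrete with a little arithmetic. The sketch below computes what share of a remote access is spent on the network for a few media and network combinations. All latency figures are rough, assumed orders of magnitude chosen for illustration; they do not come from the book, and the function name is hypothetical.

```python
def network_share(media_latency_us: float, network_rtt_us: float) -> float:
    """Fraction of total remote-access time spent traversing the network."""
    total_us = media_latency_us + network_rtt_us
    return network_rtt_us / total_us


# Assumed, illustrative latencies in microseconds: (media access, network round trip)
scenarios = {
    "HDD, slower network": (5000.0, 100.0),
    "SSD, slower network": (100.0, 100.0),
    "SSD, faster network": (100.0, 10.0),
}

if __name__ == "__main__":
    for name, (media_us, rtt_us) in scenarios.items():
        share = network_share(media_us, rtt_us)
        print(f"{name}: network is {share:.1%} of access time")
```

With these assumed numbers, the network is a small fraction of an HDD access, becomes significant once the medium is an SSD, and shrinks again when the network gets faster, which is the balance the passage describes.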

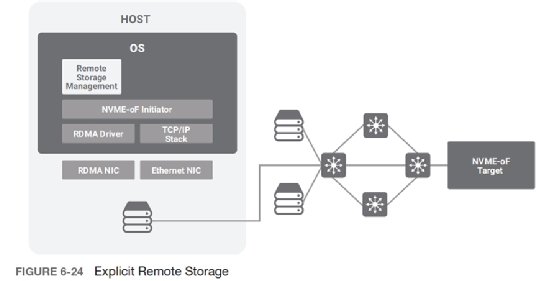

Remote storage is sometimes explicitly presented as such to the client; see Figure 6-24. This mandates the deployment of new storage management practices that affect how storage services are offered to the host, because the host’s management infrastructure must handle the remote aspects of storage (remote access permissions, mounting a specific remote volume, and so on).

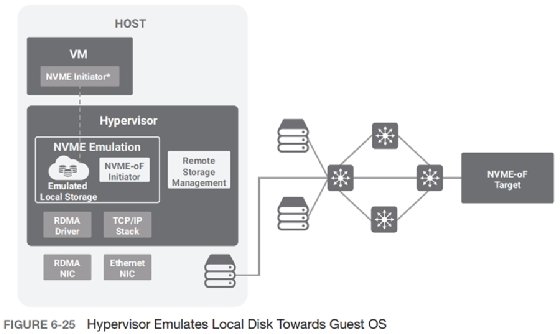

In some cases, exposing the remote nature of the storage to the client can present challenges, especially in virtualized environments where the tenant manages the guest operating system, which assumes the presence of local disks. A standard solution to this problem is for the hypervisor to virtualize the remote storage and emulate a local disk to the virtual machine; see Figure 6-25. This emulation hides the paradigm shift from the guest operating system.
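The emulation idea can be sketched in a few lines. In this minimal model, the guest only sees a disk addressed by block number, while every request is forwarded to a backend that stands in for the remote volume. The class names `RemoteVolume` and `EmulatedLocalDisk`, the 512-byte block size, and the in-memory dictionary that replaces real network I/O are all assumptions made for illustration; this is not the API of any hypervisor.

```python
BLOCK_SIZE = 512  # assumed block size in bytes


class RemoteVolume:
    """Stand-in for remote storage; a dict replaces real network requests."""

    def __init__(self) -> None:
        self._blocks: dict[int, bytes] = {}
        self.requests = 0  # counts simulated network round trips

    def read_block(self, lba: int) -> bytes:
        self.requests += 1
        # Blocks never written read back as zeros.
        return self._blocks.get(lba, bytes(BLOCK_SIZE))

    def write_block(self, lba: int, data: bytes) -> None:
        self.requests += 1
        self._blocks[lba] = data


class EmulatedLocalDisk:
    """What the guest sees: a fixed-size disk read and written by block number."""

    def __init__(self, backend: RemoteVolume, num_blocks: int) -> None:
        self._backend = backend
        self.num_blocks = num_blocks

    def _check(self, lba: int) -> None:
        if not 0 <= lba < self.num_blocks:
            raise IndexError(f"block {lba} is outside the emulated disk")

    def read(self, lba: int) -> bytes:
        self._check(lba)
        return self._backend.read_block(lba)

    def write(self, lba: int, data: bytes) -> None:
        self._check(lba)
        if len(data) != BLOCK_SIZE:
            raise ValueError("writes must be exactly one block")
        self._backend.write_block(lba, data)


if __name__ == "__main__":
    volume = RemoteVolume()
    disk = EmulatedLocalDisk(volume, num_blocks=8)
    disk.write(3, b"\x01" * BLOCK_SIZE)
    print(disk.read(3) == b"\x01" * BLOCK_SIZE, volume.requests)
```

The guest-facing class exposes nothing about permissions, mounting, or the network, which is the sense in which the remote nature of the storage stays hidden from the guest.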

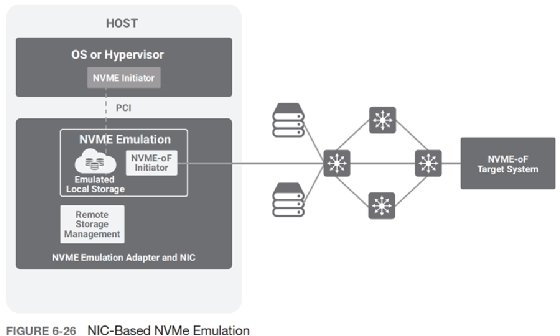

However, hypervisor-based storage virtualization is not a suitable solution in all cases. For example, in bare-metal environments, tenant-controlled operating system images run on the actual physical machines without a hypervisor to create the emulation. For such cases, a hardware emulation model is becoming more common; see Figure 6-26. This approach presents the physical server with the illusion of a local hard disk. The emulation system then virtualizes access over the network using standard or proprietary remote storage protocols. A typical approach is to emulate a local NVMe disk and use NVMe-oF to access remote storage.

One proposed way to implement the data plane for disk emulation is to use so-called “SmartNICs” (smart network interface cards); see section 8.6. These devices typically include multiple programmable cores combined with a NIC data plane. For example, the Mellanox BlueField and Broadcom Stingray products fall into this category.

Enterprise Strategy Group is a division of TechTarget.

Jen English, editor of TechTarget, contributed to this report.
