Intel patent confirms work on multi-chip-module GPUs

A recent patent published by Intel (via Underfox) may be the keystone of its future graphics accelerator designs, and it uses the multi-chip module (MCM) approach. Intel describes a series of GPUs working in tandem to deliver a single image. Intel’s design is oriented towards a hierarchy of workloads: a main graphics processor coordinates the entire workload. The company sees the MCM approach as a whole as a necessary step to steer silicon designers away from the manufacturing, scalability, and power delivery issues that come with increasing chip size in the eternal search for performance.

According to the Intel patent, multiple graphics draw calls (instructions) are routed to “a plurality” of graphics processors. The first GPU then runs an initial draw pass of the entire scene. At this point, the GPU is simply creating visibility (and obstruction) data; it is deciding what to render, which is a fast operation on modern GPUs. A number of tiles generated in this first pass then go to the other available GPUs. Those GPUs are responsible for accurately rendering the parts of the scene that correspond to their tiles, guided by the initial visibility pass, which indicates which primitives are in each tile or shows where there is nothing to render.
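
The two steps described above (a visibility pass that bins primitives into tiles, followed by handing tiles to other GPUs) can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the method claimed in the patent: the tile size, the use of 2D bounding boxes in place of real occlusion testing, the round-robin assignment, and the names `visibility_pass` and `assign_tiles` are all hypothetical.

```python
from collections import defaultdict

TILE = 64  # tile edge in pixels (assumed value, not from the patent)


def visibility_pass(primitives, width, height, tile=TILE):
    """Bin each primitive's screen-space bounding box into the tiles it overlaps.

    `primitives` maps an id to an (x0, y0, x1, y1) pixel bounding box.
    A real visibility pass would also resolve occlusion; this sketch does not.
    """
    tiles = defaultdict(list)
    for prim_id, (x0, y0, x1, y1) in primitives.items():
        # Clamp the box to the screen and skip anything fully off-screen.
        x0c, y0c = max(x0, 0), max(y0, 0)
        x1c, y1c = min(x1, width - 1), min(y1, height - 1)
        if x0c > x1c or y0c > y1c:
            continue
        for ty in range(y0c // tile, y1c // tile + 1):
            for tx in range(x0c // tile, x1c // tile + 1):
                tiles[(tx, ty)].append(prim_id)
    return dict(tiles)


def assign_tiles(tiles, num_gpus):
    """Hand non-empty tiles to GPUs round-robin (an assumed policy)."""
    work = {gpu: {} for gpu in range(num_gpus)}
    for i, key in enumerate(sorted(tiles)):
        work[i % num_gpus][key] = tiles[key]
    return work


# Hypothetical scene: two visible triangles and one entirely off-screen.
scene = {
    "tri_a": (0, 0, 100, 50),
    "tri_b": (200, 200, 220, 220),
    "tri_c": (-50, -50, -10, -10),
}
tiles = visibility_pass(scene, width=256, height=256)
work = assign_tiles(tiles, num_gpus=2)
# tiles -> {(0, 0): ['tri_a'], (1, 0): ['tri_a'], (3, 3): ['tri_b']}
# work[0] gets tiles (0, 0) and (3, 3); work[1] gets tile (1, 0)
```

Tiles that contain no primitives never appear in the result, which mirrors the point that the visibility data also shows where there is nothing to render.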

So it looks like Intel is considering integrating tile-based checkerboard rendering (a feature used in today’s GPUs) alongside distributed vertex position computation (derived from the initial frame pass). Finally, when all the GPUs have rendered their piece of the puzzle that makes up a single image (including shading, lighting and ray tracing), their contributions are put together to present the final image on screen. Ideally, this process would occur 60, 120, or even 500 times per second. Intel’s hope for multi-die performance scaling is thus laid bare before us. Intel then uses performance reports from AMD and Nvidia graphics cards running in SLI or Crossfire modes to illustrate potential performance increases in typical multi-GPU configurations. Of course, the scaling seen in those multi-card setups is bound to be lower than what a genuine MCM design could deliver.

However, Intel’s patent is fuzzy on architectural details and covers as much ground as possible, which is customary in this space. For example, it allows for designs that include multiple GPUs working in tandem, or only sections of GPUs. The method applies to “a single-CPU desktop system, a multi-CPU workstation system, a server system”, as well as within a mobile system-on-chip (SoC) design. These graphics processors, or embodiments, as Intel calls them, are even described as accepting instructions from RISC, CISC, or VLIW commands. But Intel does seem to be taking a page straight from AMD’s playbook, explaining that the “hub” nature of its MCM design could include a single die grouping memory and I/O controllers.

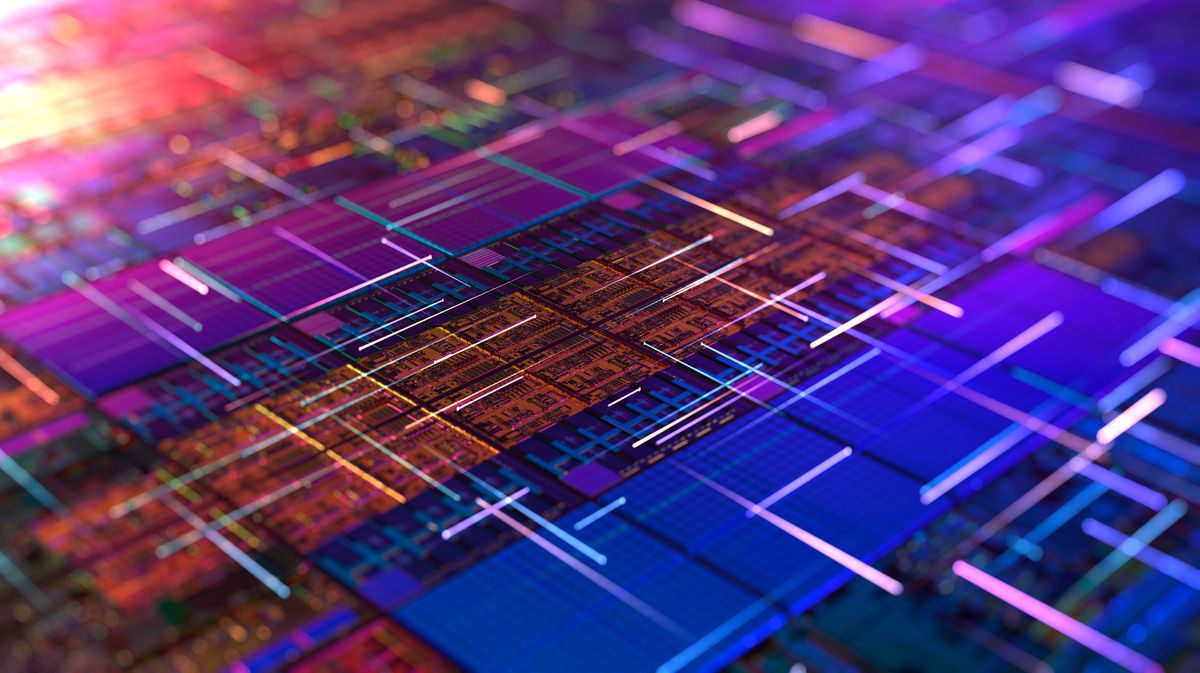

As the rate of semiconductor miniaturization slows (and continues to slow), companies must find ways to increase performance while maintaining good yields. While they must innovate on architecture, semiconductor manufacturing processes are also becoming more and more complex and exotic, with a higher number of manufacturing steps, a higher number of masks, and, finally, the integration of extreme ultraviolet (EUV) lithography. We’ve been riding the diminishing returns part of the equation for some time now: it’s getting harder and harder to increase transistor density, and increasing die areas would incur penalties on wafer yields. The only solution is to pair several smaller dies together: it’s easier to get two working 400 mm² dies than one fully functional 800 mm² die.
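
To put rough numbers on the 400 mm² versus 800 mm² comparison, here is a small worked example using the textbook Poisson yield model, Y = exp(-A × D0), where A is die area and D0 is defect density. The model choice and the defect density of 0.1 defects per cm² are assumptions made for illustration; neither comes from Intel’s patent or from any foundry figure.

```python
import math


def poisson_yield(area_mm2, defects_per_cm2):
    """Fraction of dies expected to be defect-free under a Poisson defect model."""
    area_cm2 = area_mm2 / 100.0  # 1 cm^2 = 100 mm^2
    return math.exp(-area_cm2 * defects_per_cm2)


D0 = 0.1  # defects per cm^2 (assumed, illustrative only)

y_400 = poisson_yield(400, D0)  # about 0.67
y_800 = poisson_yield(800, D0)  # about 0.45

# Silicon area that must be fabricated, on average, per working 800 mm^2 product.
# Monolithic: a single defect scraps the whole 800 mm^2 die.
# MCM: each 400 mm^2 die is tested separately, and any two good dies can be paired.
mono_mm2_per_product = 800 / y_800
mcm_mm2_per_product = 2 * 400 / y_400

print(f"400 mm^2 yield: {y_400:.2f}, 800 mm^2 yield: {y_800:.2f}")
print(f"silicon per good product: monolithic {mono_mm2_per_product:.0f} mm^2, "
      f"MCM {mcm_mm2_per_product:.0f} mm^2")
```

Under these assumptions the monolithic die needs roughly 1.5 times as much wafer area per sellable product as the two-die package. The sketch ignores packaging yield and die-to-die interconnect overhead, both of which narrow the gap in practice.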

AMD, for its part, has had great success with its MCM-based Ryzen processors since their first generation. The red company has yet to deliver an MCM-based GPU, but its next-gen Navi 31 and Navi 32 may feature this technology. And we know that Nvidia is also actively exploring MCM designs for its future graphics products, following its new Composable On Package GPU (COPA) design approach. The race has been on for a long time, even before AMD released Zen. The first company to deploy an MCM GPU design should have an edge over its competitors, with higher efficiencies enabling higher profits or lower market prices. And with all three of AMD, Intel, and Nvidia contracting the same TSMC manufacturing nodes for the foreseeable future, every slight advantage could have a potentially high market impact.
